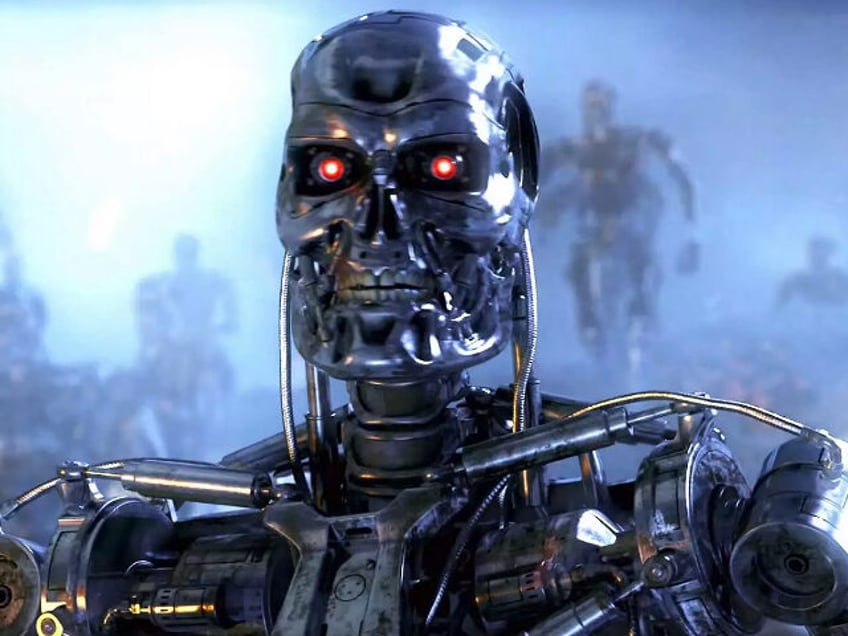

In the Terminator movies, Skynet became sentient at precisely 2:14 a.m. on August 29, 1997, leading to Judgment Day and the destruction of civilization. While real-life artificial intelligence technology still has a long way to go, James Cameron is predicting a similarly dire outcome for mankind.

The filmmaker said AI technology could lead to an “arms race” between rival nations, followed by a global war run by algorithms, a scenario that would resemble the Terminator movies, the first of which was released in 1984.

“I think the weaponization of AI is the biggest danger,” he told Canada’s CTV News. “I think that we will get into the equivalent of a nuclear arms race with AI, and if we don’t build it, the other guys are for sure going to build it, and so then it’ll escalate.

“You could imagine an AI in a combat theatre, the whole thing just being fought by the computers at a speed humans can no longer intercede, and you have no ability to de-escalate.”

'I warned you guys in 1984,' 'Terminator' filmmaker James Cameron says of AI's risks to humanity https://t.co/LahbNn9TGY

— CTV News (@CTVNews) July 18, 2023

Cameron said he agrees with the many AI experts who have recently come forward urging regulation before the technology becomes a threat to society.

“I absolutely share their concern,” Cameron said. “I warned you guys in 1984, and you didn’t listen.”

The Oscar-winning director said AI currently isn’t advanced enough to write even a convincing screenplay. But that could change soon.

“Let’s wait 20 years, and if an AI wins an Oscar for Best Screenplay, I think we’ve got to take them seriously,” he told CTV News.

Cameron’s comments follow similar remarks made by Oppenheimer director Christopher Nolan, who said AI is about to reach an “Oppenheimer moment” — or a point of no return — and that people need to be “held accountable” for its development.

Follow David Ng on Twitter @HeyItsDavidNg. Have a tip? Contact me at