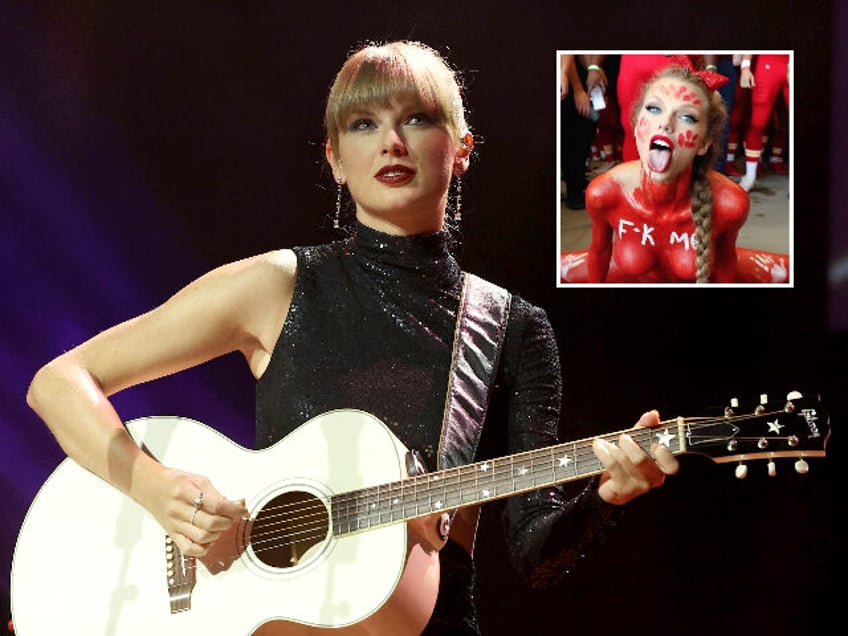

Fans are outraged after graphic AI-generated photos of pop star Taylor Swift went viral on social media. One image depicts the singer nude with Kansas City Chiefs-themed red and white body paint all over her, and “F*K ME” scrawled over her chest.

A series of sexually graphic Kansas City Chiefs-themed AI images featuring Swift, who has been a regular at Chiefs games over the last six months due to her relationship with the team’s star player Travis Kelce, has been circulating on social media, sparking outrage among fans.

“I unfortunately saw a few of those Taylor Swift AI porn photos, and whoever is making this garbage needs to be arrested,” one X/Twitter user reacted. “What I saw is just absolutely repulsive, and this kind of shit should be illegal.”

“Whoever is making those Taylor Swift Ai pictures is going to Hell (not her fan, but still),” another wrote.

“Men are posting AI-generated images of Taylor Swift being sexually assaulted, and are asking for other more graphic images to be shared for their own enjoyment. They’re posting public comments about wanting to violate her. What the hell is wrong with this world?” a third lamented.

“Those Taylor AI pics are straight up sexual harassment and it’s disgusting that man can do those things without repercussions,” another echoed. “They see women as objects made for their sick fantasies and I’m so sick of it.”

“The situation with AI images of Taylor Swift is insane. Its disgusting,” another said. “Grown men are simping over artificially generated pixels of a celebrity. These grown mf are deprived of SEX. Please PROTECT TAYLOR SWIFT.”

Many social media users simply commented in all-caps, “PROTECT TAYLOR SWIFT.”

The images are reportedly being hosted on the deepfake porn website Celeb Jihad, which was sued by celebrities in 2017 for posting explicit images that had been hacked from their personal smartphones and iCloud accounts.

The graphic AI images of Swift are just the latest example of the rise in deepfake porn.

As Breitbart News reported, deepfake porn is steadily on the rise and now makes up 98 percent of all deepfake images. Meanwhile, authorities say there is nothing they can do about deepfakes, and the vast majority of AI-generated pornography targets women.

Moreover, so-called “nudify” apps, used to make deepfake porn, are soaring in popularity.

You can follow Alana Mastrangelo on Facebook and X/Twitter at @ARmastrangelo, and on Instagram.