Jennifer DeStefano said the voice was '100%' her daughter's

Cyber kidnapping scam victim warns parents of horrific AI ploys for extortion

Jennifer DeStefano, a victim of a cyber kidnapping scam, tells 'Fox & Friends Weekend' about her shocking experience after having an interactive conversation with an AI bot that used her daughter's voice.

A cyber kidnapping scam led one mom to believe her daughter was making a pleading call to her after being kidnapped, but it was all an illusion meant not only to con the family out of money but potentially to abduct the mother as well.

"It was a back-and-forth," Jennifer DeStefano, a mom from Arizona, said Sunday on "Fox & Friends Weekend."

"She called me crying and sobbing. I asked her what happened. She said, ‘Mom, I messed up.’ I said, ‘Okay, what did you do? What happened?’ And then this man told her to put her head back, and then I got concerned. She said, ‘Mom, these bad men have me, help me, help me, help me.’ Then the phone fades off as this man gets on the phone and tells me, ‘We have her.’ It sounded as if the phone was being ripped out of her hand in that process of the conversation."

A mobile phone passcode security screen is seen in this photo illustration in Warsaw, Poland. (Photo by STR/NurPhoto via Getty Images)

DeStefano said she was pulling up to her daughter's dance studio when the call came through, explaining that the man's voice on the other end threatened to kill her daughter if she dared tell anyone about the situation.

"Not only did he want money, he wanted to physically come kidnap me as well. So he was trying to make arrangements when we were finally able to locate her. But that was one of my greatest concerns, was how this was going to be used to actually physically lure or abduct other people instead of just using it for money."

Her incident is one of many that have entered the spotlight since scammers began exploiting advances in AI.

Scammers called Jennifer DeStefano and used AI voice cloning technology to imitate the voice of her daughter. (L: Jon Michael Raasch/Fox News; R: PAU BARRENA/AFP via Getty Images)

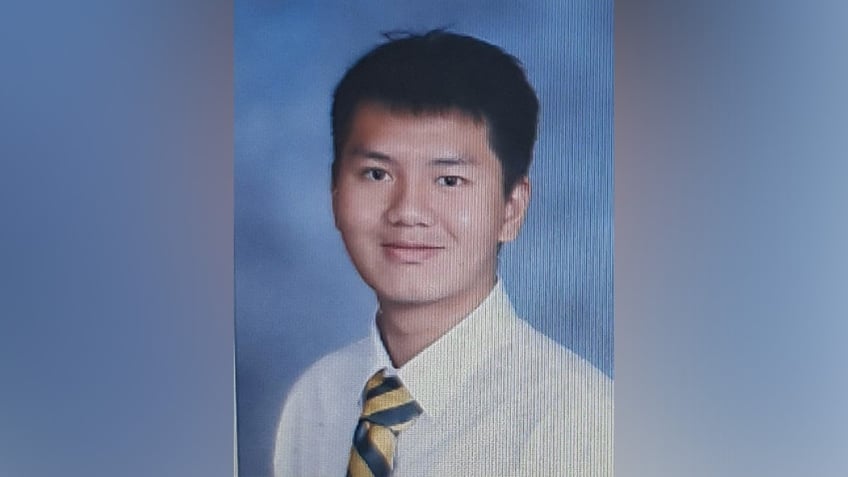

The most notable recent incident involved 17-year-old Chinese exchange student Kai Zhuang, whose family sent approximately $80,000 in ransom money after scammers led them to believe he had been kidnapped and was in danger. Zhuang was also a victim of the crime, according to local law enforcement, who said he had disappeared after isolating himself at the scammers' direction.

He was found safe last weekend.

DeStefano described the eerie realism used to trick family members into believing their loved ones are at risk.

"It was my daughter's voice, 100%," she said of the call she received.

Riverdale Police issued a missing endangered advisory early Friday morning for 17-year-old Kai Zhuang, but he was later found. (Riverdale Police Department)

"It was the way she cried. It was the way she would talk to me. We had an interactive conversation. The only way I was able to actually locate her was another mom was able to get my husband on the phone. He was able to locate her, get her on the phone with me, and I still didn't believe that she was safe until I spoke to her and confirmed that she was really who I was speaking to because I didn't believe at first… I didn't know who was who because I was so sure of her voice with the kidnappers."

Voice cloning scams are becoming increasingly common, and experts are sounding the alarm over them as well as over deepfakes and other sophisticated attacks.

Taylor Penley is an associate editor with Fox News.