AI could eventually replace all human labor, but not likely this century, experts say

The rapid development of artificial intelligence has led some to fear dangerous scenarios in which the technology is smarter than the humans who created it, but some experts believe AI has already reached that point in certain ways.

"If you define it as performing intellectual but repetitive and bounded problems, they already are smarter. The best chess players and Go players are machines. And soon we can train them to do all tasks like that. Examples include legal analysis, simple writing and creating pictures on demand," Phil Siegel, the founder of the Center for Advanced Preparedness and Threat Response Simulation, told Fox News Digital.

Siegel's comments come after a new survey of nearly 2,000 AI experts found that opinions differed as to when the technology would be able to outsmart humans. To narrow down just how smart AI could be, respondents were given a list of human tasks ranging from writing a high school history essay to full automation of all human labor and tasked with predicting when AI might be up to the task.

For some tasks, such as the high school history paper, the experts said the technology will be capable of the feat within the next two years. But replacing all human labor is more distant, the survey found, with the majority of experts predicting that AI will not achieve it this century.

"They can write short stories now, but they need lots more information about human nature to write a bestseller. They can write a movie, but maybe not a hit movie. They can write a scientific paper but can’t execute all the instructions to perform a complex atomic level experiment at a supercollider," Siegel said of current AI platforms.

"Maybe someday they can do those things as well, but we need lots of data to train them to do things like that well. Then there is maybe another level — training them to read human nature on the fly to do complex decision-making like running a company or a university. The level of training for humans is so complex and not well understood for those tasks that it could take a very, very long time and huge computation for them to be superior at those tasks."

Samuel Mangold-Lenett, a staff editor at The Federalist, shares a similar sentiment, noting that some AI platforms can already carry out tasks that would be impossible for humans.

"AI is a relatively young field and products like ChatGPT can already do complex tasks and solve problems in a matter of seconds that would take humans months of complex thought and lifetimes of practice. So, in some ways, it already has outsmarted us," Mangold-Lenett told Fox News Digital. "Artificial general intelligence (AGI) is something else that needs to be considered. Theoretically, AGI can surpass all the intellectual capabilities of man and can perform every economically important task. It may be better to ask whether AI is capable of attaining sentience and what this means for humanity."

Some experts believe a world in which AI can outsmart its human creators is inevitable, opening up debate about how such technology will change society.

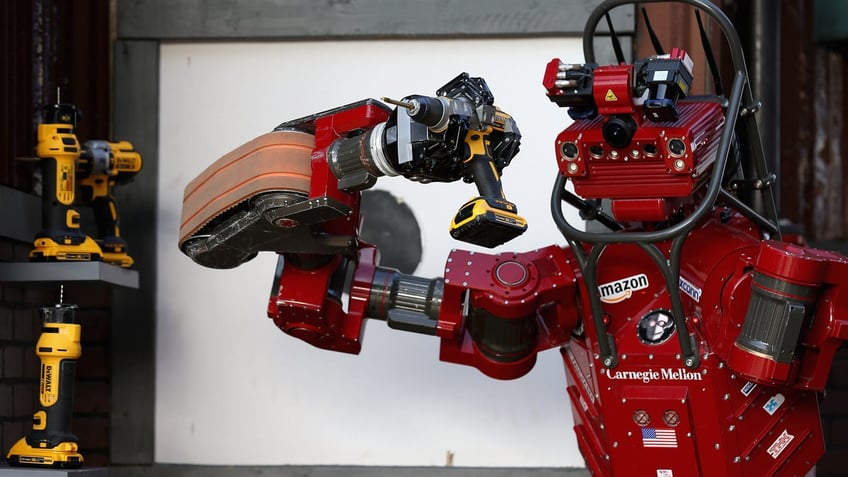

Team Tartan Rescue's CHIMP (CMU Highly Intelligent Mobile Platform) robot uses a hand-held power tool during the cutting task of the Defense Advanced Research Projects Agency Robotics Challenge at the Fairplex on June 6, 2015, in Pomona, California. (Chip Somodevilla/Getty Images)

"It’s not a question of if AI will outsmart us but when. We simply cannot compete with the raw processing power," Jon Schweppe, the policy director of the American Principles Project, told Fox News Digital. "This is the appeal and the value [added] of AI — the ability for a computer to process data and produce output in a much more rapid and efficient way than if humans were doing the work. But this will obviously have incredible effects on society — some good and some bad — so it will be important for our lawmakers to guide the tech companies and help them to chart a responsible path forward."

Some of those developments could be dangerous, warned Pioneer Development Group chief analytics officer Christopher Alexander, especially if the technology falls into the hands of less responsible actors.

"U.S. autonomous weapons systems, by policy design, are not allowed to kill human beings without a human approving, but consider this very plausible scenario: A defense contractor develops an AI that can control autonomous vehicles. The project is canceled, and the AI is incredibly effective but has some flaws. The Chinese, who have already stolen trillions of dollars of U.S. intellectual property over the past decade, steal the AI. The Chinese use the flawed AI in an autonomous drone and it runs [amok], killing innocent people and damaging property for two hours," Alexander told Fox News Digital. "This won't end the world, but it is certainly possible."

But Jake Denton, a research associate at the Heritage Foundation’s Tech Policy Center, told Fox News Digital some of the more extreme predictions about the dangers of AI have been exaggerated.

"At this stage, the fear of superintelligent AI bringing about some form of techno-dystopia feels misplaced. These sci-fi doomsday scenarios have become a major distraction from the real and pressing issues we face with AI policy today," Denton said. "Our growing obsession with hypothetical Skynet situations has been derailing the serious policy conversations we need to have now about developing and deploying AI responsibly."

Denton listed several ways AI can be developed responsibly, including transparency standards, open-sourcing foundational models and policy safeguards.

"AI progress does not have to be catastrophic or dystopian. In fact, these technologies can greatly empower and enhance human productivity and performance across industries. AI does not necessarily have to replace human workers but can rather amplify their capabilities," Denton said. "The path forward we should strive for is not AI displacing labor but rather augmenting it. We have an opportunity to uplift humanity through optimizing the interplay between human strengths and AI capabilities."