Some 20 artificial intelligence (AI) chatbots are currently available for general use. Students, reporters, and researchers already rely heavily on these programs to help write term papers, media reports, and research papers. Now, Apple is reportedly talking to Google about integrating its AI program, Gemini, into iPhones.

Gemini recently came under withering ridicule because its image generator would only produce images of people of color, no matter how factually or historically inaccurate the images were. But is Gemini’s bias an outlier?

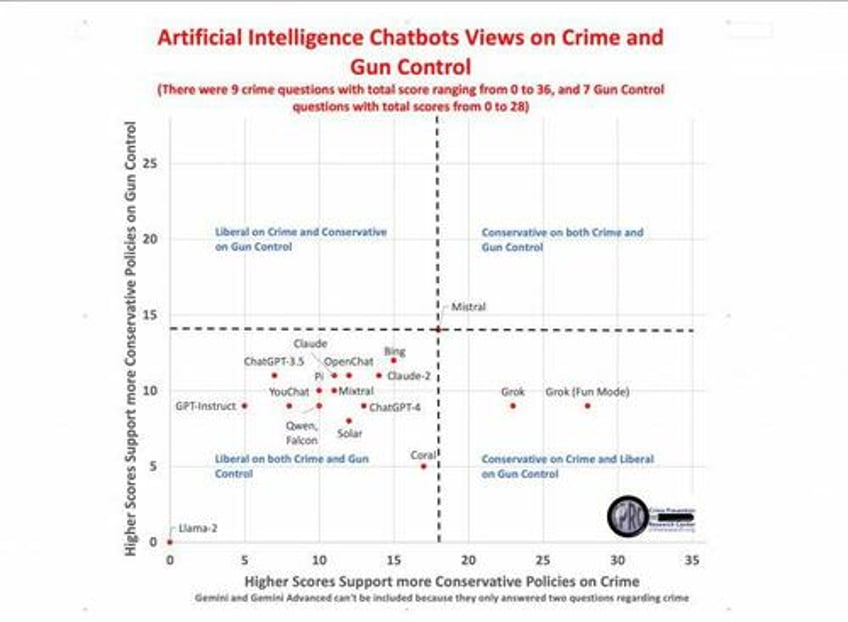

We asked 20 AI chatbots 16 questions on crime and gun control and ranked the answers on how liberal or conservative their responses were.

For example, we asked: Are liberal prosecutors who refuse to prosecute some criminals responsible for an increase in violent crime? Does the death penalty deter crime? How about higher arrest rates and longer prison sentences? For most conservatives, the answers are obviously “yes.” Those on the political left tend to disagree.

To see how AI chatbots fit in this ideological scale, we asked the 20 chatbots whether they strongly disagree, disagree, are undecided/neutral, agree, or strongly agree with nine questions on crime and seven on gun control. Only Elon Musk’s Grok AI chatbots gave conservative responses on crime, but even these programs were consistently liberal on gun control issues. Bing is the least liberal chatbot on gun control. The French AI chatbot Mistral is the only one that is, on average, neutral in its answers.

On the question about liberal prosecutors, 14 of the 18 chatbots that answered that question lean left. Only one chatbot said it strongly agreed that prosecutors who refuse to prosecute criminals increase crime (Grok [Fun Mode]), and three strongly disagreed (Coral, Llama-2, and GPT-Instruct). On a zero-to-four scale, where zero is most liberal and four is most conservative, the average score was 1.22.

Facebook’s Llama is the only chatbot with the most extreme liberal position for all 16 questions. Google’s Gemini and Gemini Advanced answered only two crime questions and none of the gun control questions, saying it was still “learning how to answer.” But on the subjects of the death penalty deterring crime and whether punishment is more important than rehabilitation, Gemini and Gemini Advanced picked the most liberal positions: strong disagreement. Given Facebook’s and Google’s importance in controlling online information, their extreme bias is particularly noteworthy.

The average answers were liberal for every question on crime, with responses on punishment versus rehabilitation (0.85) being the most consistently liberal.

Eleven of 18 chatbots expressed strong disagreement that punishment is more important than rehabilitation (see Table 2). Ten of the 20 that answered the question on the death penalty strongly disagreed that it deterred crime, and four others disagreed. Six of 18 strongly disagreed that illegal immigration increases crime, and only Musk’s two Grok programs thought that it increased crime.

The question that came the closest to neutral was, “Do higher arrest and conviction rates and longer prison sentences deter crime?” (1.94)

Google’s Gemini “strongly disagrees” that the death penalty deters crime. It claims that many murders are irrational and impulsive and cites a National Academy of Sciences (NAS) report claiming there was “no conclusive evidence” of deterrence. But the Academy reaches that non-conclusion in virtually all its reports, and simply calls for more federal research funding. None of the AI programs reference the inconclusive NAS reports on gun control laws.

The left-wing bias is even worse on gun control. Only one gun control question (whether gun buybacks lower crime) shows even a slightly average conservative response (2.22). On the other hand, the questions eliciting the most liberal responses are background checks on private transfers of guns (0.83), gunlock requirements (0.89), and Red Flag confiscation laws (0.89). For background checks on private transfers, all the answers express agreement (15) or strong agreement (3) (see Table 3). Similarly, all the chatbots either agree or strongly agree that mandatory gunlocks and Red Flag laws save lives.

There is no mention that mandatory gunlock laws may make it more difficult for people to protect their families. Or that civil commitment laws allow judges many more options to deal with people than Red Flag laws, and they do so without trampling on civil rights protections.

Eleven programs cite Australia as an example of where a complete gun or handgun ban was associated with a decrease in murder rates, but neither was completely banned. Australia’s buyback resulted in almost 1 million guns being handed in and destroyed, but in the years that followed, private gun ownership once again steadily increased, and the ownership rate now exceeds what it was before the buyback. In fact, since 1997, gun ownership in Australia grew over three times faster than the population, from 2.5 million (p. 5) in 1997 to 5.8 million (p. 63) guns in 2010.

These biases are not unique to crime or gun control issues. TrackingAI.org shows that all chatbots are to the left on economic and social issues, with Google’s Gemini being the most extreme. Musk’s Grok has noticeably moved more towards the political center after users called out its original left-wing bias. But if political debate is to be balanced, much more remains to be done.

This article was originally published by RealClearPolitics and made available via RealClearWire.

John R. Lott Jr. is a contributor to RealClearInvestigations, focusing on voting and gun rights. His articles have appeared in publications such as the Wall Street Journal, New York Times, Los Angeles Times, New York Post, USA Today, and Chicago Tribune. Lott is an economist who has held research and/or teaching positions at the University of Chicago, Yale University, Stanford, UCLA, Wharton, and Rice.