British chat forums are shutting themselves down rather than face the regulatory burdens imposed by a new internet policing law.

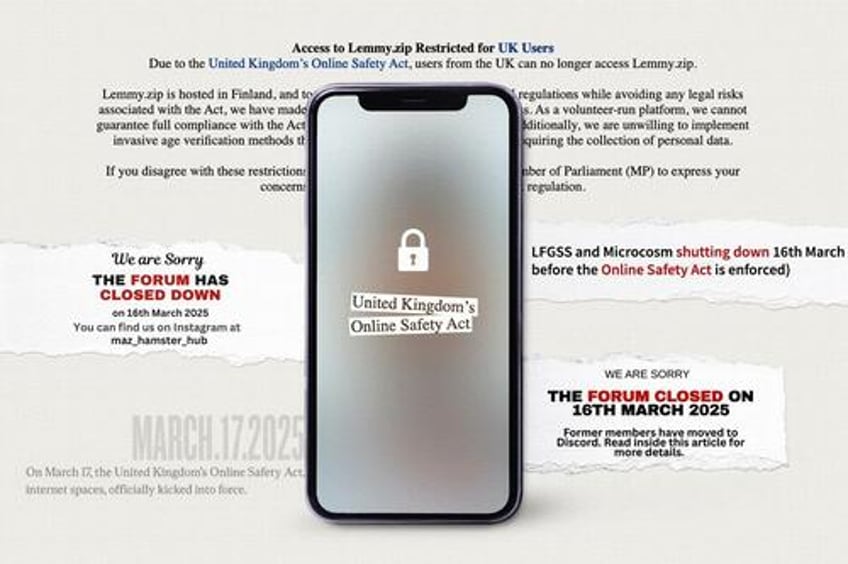

On March 17, key duties under the United Kingdom’s Online Safety Act, a law that regulates internet spaces, officially came into force.

Under the law, online platforms must immediately start putting in place measures to protect people in the UK from criminal activity, a requirement with far-reaching implications for the internet.

However, for some forums, from those serving cyclists, hobbyists, and hamster owners to support groups for divorced fathers and more, the regulatory pressure is proving too much. The law's myriad rules are prompting chat forums, some of which have operated for decades, to call it a day.

Conservative peer Lord Daniel Moylan told The Epoch Times by email that “common sense suggests the sites least likely to survive will be hobby sites, community sites, and the like.”

‘Small But Risky Services’

The Act, which was celebrated as a world-first online safety law, was designed to ensure that tech companies take more responsibility for the safety of their users.

For example, user-to-user service providers, including social media platforms, have a duty to proactively police harmful illegal content such as revenge and extreme pornography, sex trafficking, harassment, coercive or controlling behavior, and cyberstalking.

But what the government calls “small but risky services,” which are often forums, have to submit illegal harms risk assessments to the Online Safety Act’s regulator, Ofcom, by March 31.

Ofcom first published its illegal harms codes of practice and guidance in December 2024 and gave providers three months to carry out their risk assessments.

Ofcom, which was given enforcement powers under the law, warned that providers that fail to do so may face enforcement action.

“We have strong enforcement powers at our disposal, including being able to issue fines of up to 10 percent of turnover or £18 million ($23 million)—whichever is greater—or to apply to a court to block a site in the UK in the most serious cases,” said Ofcom.

Some of the rules for owners of these sites, which are often operated by individuals, include keeping written records of their risk assessments, detailing levels of risk, and assessing the “nature and severity of potential harm to individuals.”

While terrorism and child sexual exploitation may be more straightforward to assess and mitigate, offenses such as coercive and controlling behavior and hate offenses are more challenging to manage on forums with thousands of users.

‘No Way To Dodge It’

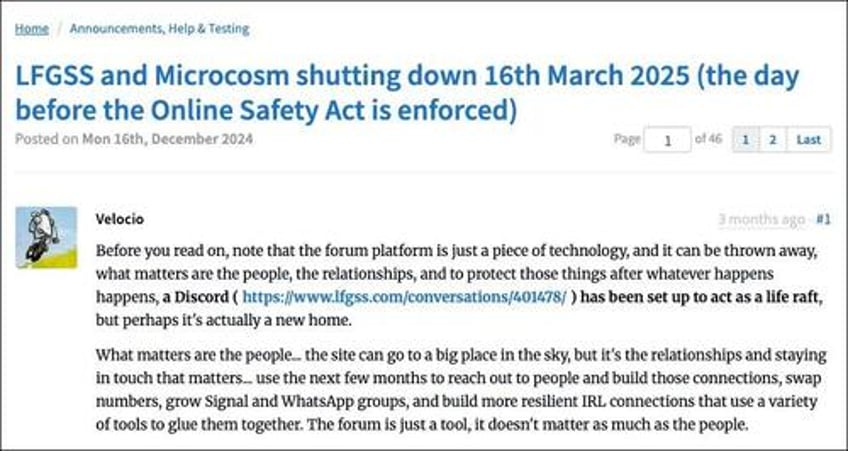

LFGSS (London Fixed Gear and Single Speed), a popular cycling forum and resource for nearly two decades, shut down in December.

“We’re done ... we fall firmly into scope, and I have no way to dodge it,” the site said, adding that the law “makes the site owner liable for everything that is said by anyone on the site they operate.”

“The act is too broad, and it doesn’t matter that there’s never been an instance of any of the proclaimed things that this act protects adults, children, and vulnerable people from ... the very broad language and the fact that I’m based in the UK means we’re covered,” it said.

Dee Kitchen, the developer of Microcosm, the forum software that powered 300 online communities including LFGSS, said he deleted them all on March 16, a day before the law kicked in.

More recently, the Hamster Forum shut down.

On March 16, it wrote that while the forum has “always been perfectly safe, we were unable to meet the compliance.”

The resource forum dadswithkids, which served single dads and fathers going through divorce or separation and also taught them how to maintain relationships with their children, has also shut down.

UK users are also being blocked from accessing sites hosted abroad.

The operators of lemmy.zip, a forum hosted in Finland, said that to ensure compliance with international regulations while avoiding any legal risks associated with the Act, they had made the difficult decision to block UK access.

“These measures pave the way for a UK-controlled version of the ‘Great Firewall,’ granting the government the ability to block or fine websites at will under broad, undefined, and constantly shifting terms of what is considered ‘harmful’ content,” it said.

‘Not Setting Out to Penalize’

An Ofcom spokesman told The Epoch Times by email: “We’re not setting out to penalize small, low-risk services trying to comply in good faith, and will only take action where it is proportionate and appropriate.”
