Yann LeCun, a key figure in the development of modern artificial intelligence, is speaking out against what he perceives as overblown predictions and concerns about AI’s capabilities and potential dangers. The Meta scientist calls worries about AI “complete B.S.” while claiming the technology is dumber than a house cat.

The Wall Street Journal reports that Yann LeCun, a prominent figure in the field of artificial intelligence and chief AI scientist at Meta Platforms, is taking a stand against what he sees as exaggerated claims about AI’s current and near-future capabilities. As one of the recipients of the 2019 A.M. Turing Award, often referred to as the “Nobel Prize of Computing,” LeCun’s perspective carries significant weight in the AI community.

LeCun’s position contrasts sharply with some of his peers and other tech industry leaders who have been vocal about the rapid advancement and potential risks of AI. For instance, OpenAI’s Sam Altman has suggested that artificial general intelligence (AGI) could be achieved within “a few thousand days,” while Elon Musk has projected it could happen as soon as 2026.

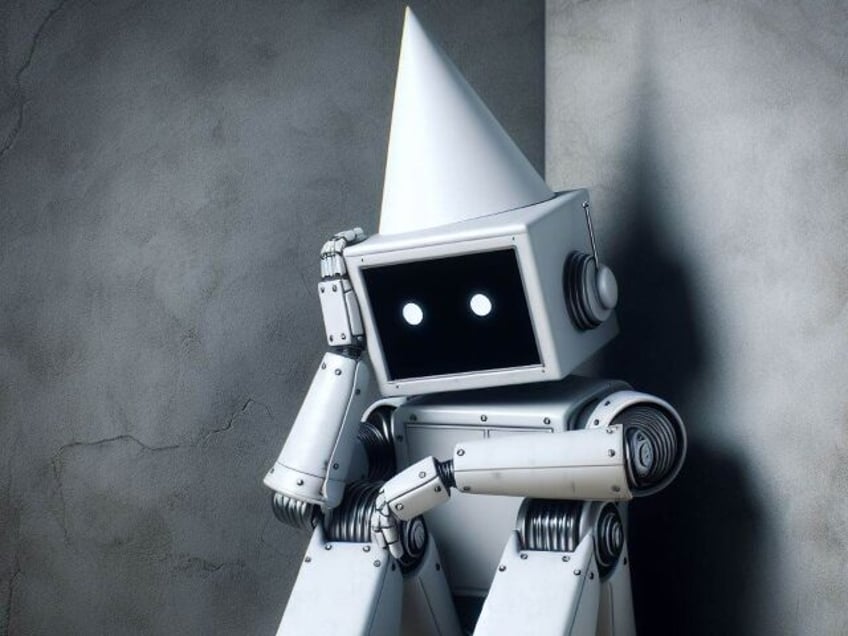

However, LeCun maintains that current AI models, despite their usefulness, are far from rivaling the intelligence of even household pets. When asked about concerns that AI might soon become powerful enough to pose a threat to humanity, LeCun dismisses such notions, stating, “You’re going to have to pardon my French, but that’s complete B.S.”

LeCun argues that today’s AI systems, including large language models (LLMs) that power chatbots like ChatGPT, lack fundamental aspects of intelligence such as common sense, persistent memory, and the ability to reason or plan. He likens the current state of AI to being less capable than a house cat, which possesses a mental model of the physical world and some reasoning ability.

The AI pioneer’s skepticism extends to the idea that simply scaling up current AI models with more data and computing power will lead to AGI. Instead, LeCun believes that a fundamentally different approach to AI design is necessary to achieve human-level intelligence. He suggests that future breakthroughs may come from research into AI systems that learn from the real world in ways more analogous to how animals and humans develop intelligence.

At Meta, where LeCun oversees one of the world’s best-funded AI research organizations, work is ongoing to develop AI that can digest and learn from video of the real world. This approach aims to create models that build world understanding in a manner similar to how infants learn by observing their environment.

While acknowledging the significant impact of AI on companies like Meta, where it is integral to functions such as real-time translation and content moderation, LeCun remains cautious about overstating AI’s current capabilities. He points out that while large language models are adept at predicting the next word in a sequence and have vast memory capacity, this doesn’t equate to true intelligence or reasoning ability.

LeCun’s stance has led to public disagreements with other AI experts, including his fellow Turing Award winners Geoffrey Hinton and Yoshua Bengio, who have expressed concerns about the potential dangers of advanced AI systems.

As Breitbart News previously reported, Hinton argues that AI may try to overtake humanity:

Hinton does not shy away from shedding light on the darker aspects and uncertainties surrounding AI. He says candidly, “We’re entering a period of great uncertainty where we’re dealing with things we’ve never done before. And normally the first time you deal with something totally novel, you get it wrong. And we can’t afford to get it wrong with these things.”

One of the most pressing concerns Hinton raises relates to the autonomy of AI systems, particularly their potential ability to write and modify their own computer code. This, he suggests, is an area where control may slip from human hands, and the consequences of such a scenario are not fully predictable. Furthermore, as AI systems continue to absorb information from various sources, they become increasingly adept at manipulating human behavior and decisions. Hinton warns, “I think in five years time it may well be able to reason better than us.”

Read more at the Wall Street Journal.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.