European regulators have launched an investigation into Mark Zuckerberg’s Meta, escalating pressure on the tech giant to bolster its safeguards against misinformation and foreign interference on Facebook, Instagram, and WhatsApp ahead of pivotal EU elections.

The New York Times reports that the European Union’s executive branch, the European Commission, has Zuckerberg’s Meta in its crosshairs over concerns that the company’s Facebook and Instagram platforms lack sufficient protections against the spread of misleading ads, AI-generated deepfakes, and other deceptive content aimed at amplifying political divisions and swaying elections.

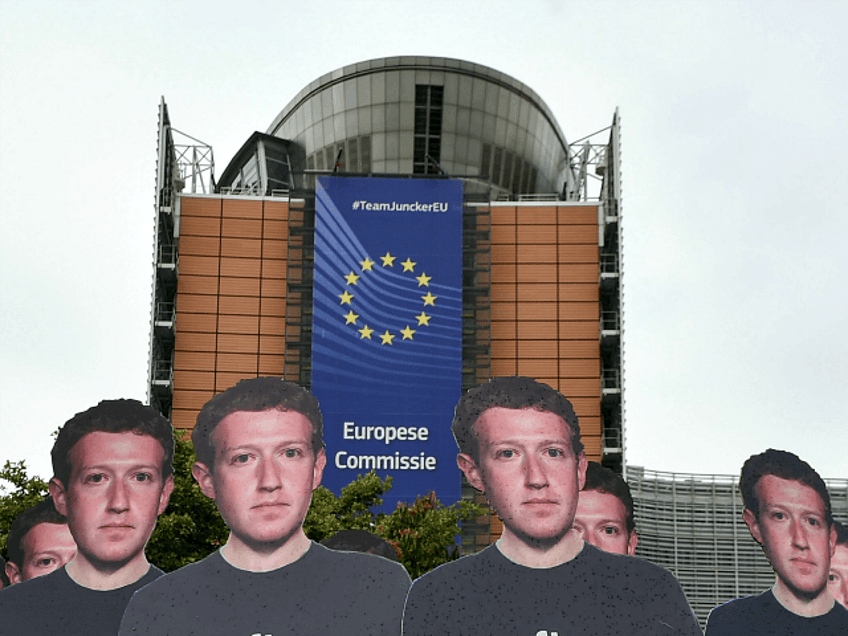

JOHN THYS/AFP/Getty Images

In announcing the formal inquiry on Tuesday, EU officials made it clear they intend to compel Meta to deploy more aggressive measures to combat malicious actors seeking to undermine the integrity of the upcoming European Parliament elections from June 6 to June 9.

The investigation highlights the EU’s firm stance on reining in big tech’s perceived failures in content moderation – an approach that contrasts starkly with that of the US, where free speech protections limit government oversight of online discourse. Under the recently enacted Digital Services Act, European authorities now wield formidable powers to scrutinize and penalize major platforms like Meta.

Ursula von der Leyen, President of the European Commission, struck a resolute tone, stating, “Big digital platforms must live up to their obligations to put enough resources into this, and today’s decision shows that we are serious about compliance.”

At the heart of the investigation lie concerns over deficiencies in how Meta’s content moderation systems identify and remove harmful content from malicious actors. Regulators cited a recent report from AI Forensics, a European civil society group, which exposed a Russian disinformation network purchasing misleading ads through fake accounts on Meta’s platforms.

Furthermore, officials allege that Meta appears to be suppressing the visibility of certain political content with potentially detrimental effects on the electoral process, underscoring demands for greater transparency around how such content propagates.

While defending its policies and asserting proactive efforts to curb disinformation, Meta affirmed its readiness to cooperate with the European Commission, stating, “We have a well-established process for identifying and mitigating risks on our platforms. We look forward to continuing our cooperation with the European Commission and providing them with further details of this work.”

The inquiry marks the latest salvo from EU regulators invoking the Digital Services Act, with similar probes underway into TikTok and Twitter (now known as X). Potential penalties for violators are severe, with the Commission empowered to levy fines of up to six percent of a company’s global revenue and to conduct office raids to gather evidence.

Read more at the New York Times.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.