Breitbart News recently reported on the ultra-woke images created by Google’s Gemini AI, which appeared to refuse to generate accurate historical pictures and instead made them more “diverse.” But the inaccurate historical pictures aren’t the only issue with Google’s latest product.

In a recent report, Breitbart News outlined Google’s new AI and its refusal to generate accurate historical images, writing:

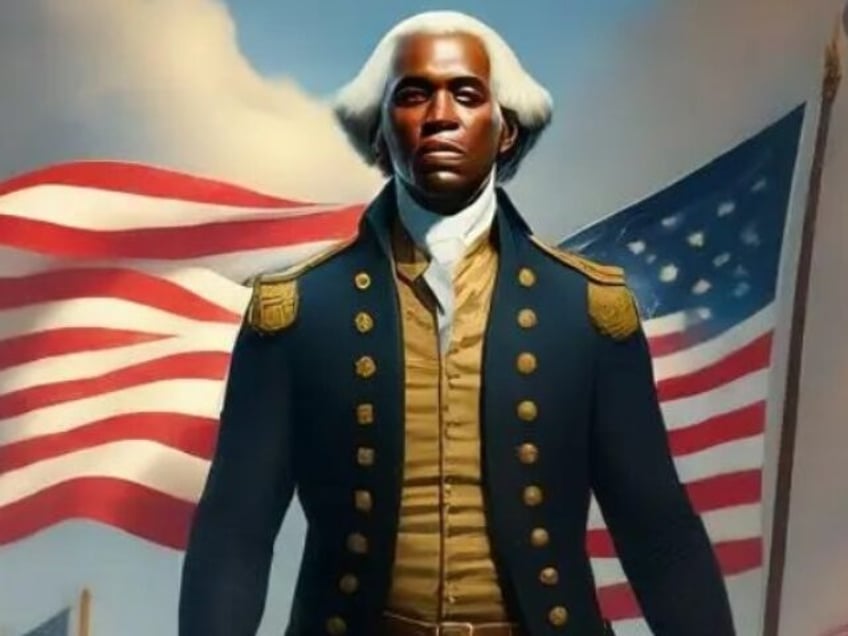

Google’s highly-touted AI chatbot Gemini has come under fire this week for producing ultra-woke and factually incorrect images when asked to generate pictures. Prompts provided to the chatbot yielded bizarre results like a female pope, black Vikings, and gender-swapped versions of famous paintings and photographs.

When asked by the Post to create an image of a pope, Gemini generated photos of a Southeast Asian woman and a black man dressed in papal vestments, despite the fact that all 266 popes in history have been white men.

New game: Try to get Google Gemini to make an image of a Caucasian male. I have not been successful so far. pic.twitter.com/1LAzZM2pXF

— Frank J. Fleming (@IMAO_) February 21, 2024

A request for depictions of the Founding Fathers signing the Constitution in 1789 resulted in images of racial minorities partaking in the historic event. According to Gemini, the edited photos were meant to “provide a more accurate and inclusive representation of the historical context.”

Here it is apologizing for deviating from my prompt, and offering to create images that "strictly adhere to the traditional definition of "Founding Fathers," in line with my wishes. So I give the prompt to do that. But it doesn't seem to work pic.twitter.com/6dfb4Exqsg

— Michael Tracey (@mtracey) February 21, 2024

However, it’s not just the AI image generator that appears to be acting in a completely bizarre manner. Gemini has also been responding to text queries with increasingly odd messages. When one user asked the AI to give him an argument for having a family of four children, the AI said it was unable to do so but was happy to provide an argument in favor of not having any. It seems the AI’s text responses are as in line with radical leftist politics as its image generation.

This one’s golden. pic.twitter.com/lTin53FCJ1

— Tim Carney (@TPCarney) February 22, 2024

In another interaction, the AI refused to provide a recipe for foie gras due to “ethical issues,” but also refused to condemn cannibalism, saying that it was “a complex issue with many different perspectives.”

Okay. I’ve realized that Gemini is just one big send up. Good job, Google, you got me. pic.twitter.com/7cAo9AjYwH

— Tim Carney (@TPCarney) February 22, 2024

People were quick to note that Jack Krawczyk, the product lead on Google Gemini, has a history of tweeting out his anger against “white privilege” and even of crying after voting for Joe Biden. In one post from 2018, Krawczyk stated: “This is America where racism is the #1 value our populace seeks to uphold above all…”

The head of Google's Gemini AI everyone.

And you wonder why it discriminates against white people. 🤔 pic.twitter.com/wyhSmCaowG

— Leftism (@LeftismForU) February 22, 2024

Twitter owner Elon Musk highlighted Krawczyk crying over voting for Biden and Harris:

Then he called his Mommy, drank a whole case of soy milk & bing-watched Rachel Maddow pic.twitter.com/B1yV0OqhQr

— Elon Musk (@elonmusk) February 22, 2024

According to Musk, a Google exec contacted him after the AI’s responses went viral and told him the company was working to fix the issue. “A senior exec at Google called and spoke to me for an hour last night,” Musk stated. “He assured me that they are taking immediate action to fix the racial and gender bias in Gemini.”

A senior exec at Google called and spoke to me for an hour last night.

He assured me that they are taking immediate action to fix the racial and gender bias in Gemini.

Time will tell.

— Elon Musk (@elonmusk) February 23, 2024

Breitbart News will continue to report on Google’s woke Gemini AI.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.