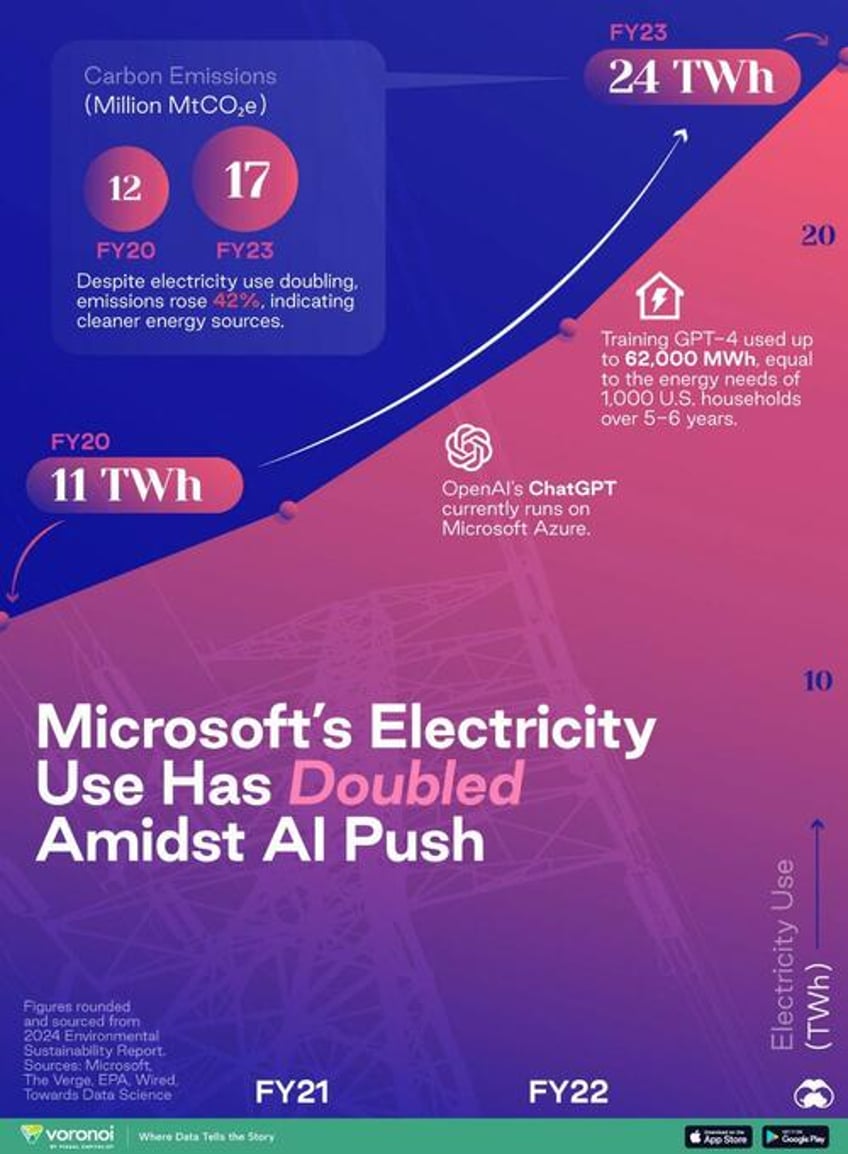

Big Tech’s AI arms race has a significant energy cost. For example, a study found that training OpenAI’s GPT-4 used up to 62,000 megawatt-hours, equal to the energy needs of 1,000 U.S. households over 5-6 years.
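The household comparison above can be sanity-checked with a few lines of arithmetic. This is a rough sketch, not the study's method: the per-household figure of 10.5 MWh per year is an assumed round number for average U.S. residential electricity use and does not come from the article.

```python
# Figures quoted in the article
GPT4_TRAINING_MWH = 62_000   # upper-bound estimate for training GPT-4
HOUSEHOLDS = 1_000

# Assumption (not from the article): average annual electricity
# use of one U.S. household, in MWh.
ASSUMED_MWH_PER_HOUSEHOLD_YEAR = 10.5


def household_years(total_mwh, households, mwh_per_household_year):
    """Return how many years `households` homes could run on `total_mwh`."""
    return total_mwh / (households * mwh_per_household_year)


years = household_years(
    GPT4_TRAINING_MWH, HOUSEHOLDS, ASSUMED_MWH_PER_HOUSEHOLD_YEAR
)
print(f"{years:.1f} years")
```

Under that assumption the result is roughly 5.9 years, which falls inside the 5-6 year range quoted above. A different household average would shift the answer proportionally.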

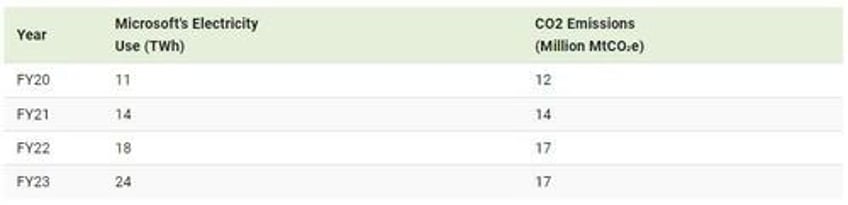

And, as Visual Capitalist's Pallavi Rao details below, this can be seen when tracking Microsoft’s electricity use (in terawatt-hours) and related carbon emissions (in million metric tons of CO₂e) in the last four years.

Data is sourced from the company’s 2024 Sustainability Report, and covers FY 2020–23. Microsoft’s financial year runs from July 1st to June 30th.

AI Push is Putting Pressure on Microsoft’s Emissions Targets

In just four years, Microsoft’s electricity consumption has more than doubled from 11 TWh to 24 TWh. For context, the entire country of Jordan (population: 11 million) uses 20 TWh of electricity in a given year.

This jump in electricity use is accompanied by a 42% increase in total carbon emissions. Because emissions have grown far more slowly than electricity consumption, this indicates a growing share of renewable energy sources.

Note: Figures rounded.
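The figures above can be compared directly with some simple arithmetic. The sketch below uses only the rounded numbers quoted in the article. The "intensity" ratio is a crude illustration introduced here, since total emissions also include sources other than electricity (such as construction), so it should not be read as Microsoft's reported grid carbon intensity.

```python
# Rounded figures quoted in the article
ELECTRICITY_TWH_START = 11   # Microsoft, FY 2020
ELECTRICITY_TWH_END = 24     # Microsoft, FY 2023
EMISSIONS_GROWTH = 0.42      # increase in total carbon emissions
JORDAN_TWH = 20              # Jordan's annual electricity use


def pct_change(start, end):
    """Fractional change from `start` to `end` (1.0 means +100%)."""
    return (end - start) / start


electricity_growth = pct_change(ELECTRICITY_TWH_START, ELECTRICITY_TWH_END)

# Crude ratio: how total emissions per unit of electricity changed.
# A value below zero means emissions grew more slowly than electricity use.
intensity_change = (1 + EMISSIONS_GROWTH) / (1 + electricity_growth) - 1

# Microsoft's latest consumption relative to Jordan's
vs_jordan = ELECTRICITY_TWH_END / JORDAN_TWH

print(f"Electricity growth: {electricity_growth:.0%}")
print(f"Change in emissions per TWh: {intensity_change:.0%}")
print(f"Microsoft vs. Jordan: {vs_jordan:.1f}x")
```

With these inputs, electricity use grew about 118% while emissions per unit of electricity fell by roughly 35%, which is consistent with a greater share of low-carbon power.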

Both trends coincide with the use of Microsoft Azure to train and run AI models, of which OpenAI’s ChatGPT is the most prominent.

In fact, Microsoft spent “hundreds of millions of dollars” to develop a supercomputer just for ChatGPT, which involved linking thousands of Nvidia GPUs.

Training AI models requires a lot of compute. The data centers built to provide that compute are more power-hungry than those providing traditional email or website services.

The construction of Microsoft’s data centers alone has accounted for 30% of the emissions increase between 2020–23.

This emissions increase comes after Microsoft stated its ambition to become carbon negative by 2030. Google is in a similar quagmire. It, too, set a 2030 target, aiming for net-zero emissions. Instead, its emissions have risen 48% since 2019.