On Monday night, Elon Musk's artificial intelligence startup, xAI, unveiled its latest model, Grok 3. The billionaire touted it as the "smartest AI on Earth," and the model posted a record-breaking score in an AI evaluation, outperforming models from OpenAI and China's DeepSeek. Remember, Musk is simultaneously juggling DOGE and several other ventures, including autonomous vehicles, space exploration, neurotechnology, robotics, tunneling, and his social media platform, X.

Musk explained the mission of Grok:

"The mission of xAI and Grok is to understand the universe. We want to answer the biggest questions: Where are the aliens? What's the meaning of life? How does the universe end? To do that, we must rigorously pursue truth."

Elon Musk

— Tesla Owners Silicon Valley (@teslaownersSV) February 18, 2025


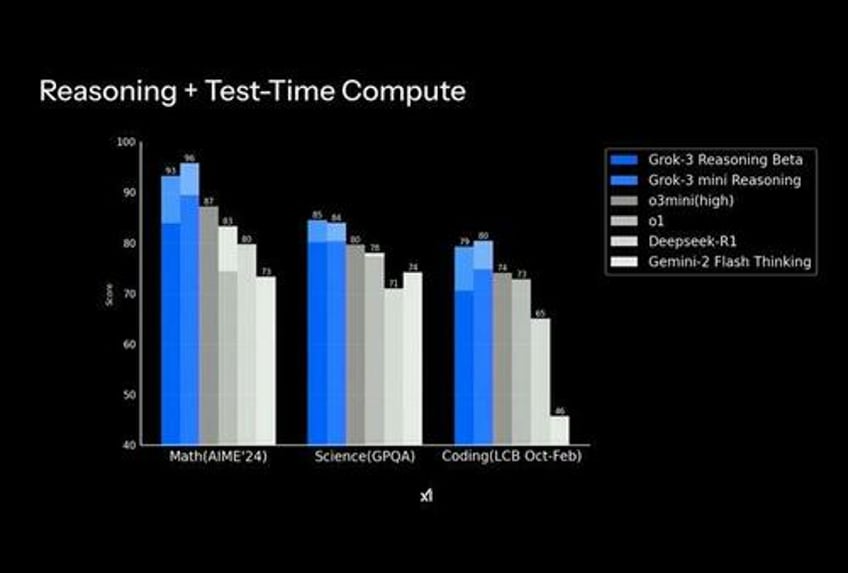

The xAI team said Grok 3 outperformed Alphabet's Google Gemini, DeepSeek's V3 model, Anthropic's Claude, and OpenAI's GPT-4o on math, science, and coding benchmarks.

Musk noted that Grok 3 has "more than 10 times" the computing power of its predecessor and completed pre-training earlier this year.

In LMArena's blind tests of large language models, Grok 3 became the first model to break a score of 1400.

"And it's still climbing. So we have to keep updating it. It's 1400 and climbing," Musk said.

Musk said, "We're continually improving the models every day, and literally within 24 hours, you'll see improvements."

BREAKING: @xAI early version of Grok-3 (codename "chocolate") is now #1 in Arena! 🏆

Grok-3 is:

- First-ever model to break 1400 score!

- #1 across all categories, a milestone that keeps getting harder to achieve

Huge congratulations to @xAI on this milestone! View thread 🧵… https://t.co/p8z8lccNd5 pic.twitter.com/hShGy8ZN1o

— lmarena.ai (formerly lmsys.org) (@lmarena_ai) February 18, 2025

"The new chatbot appears to put Grok ahead of OpenAI's latest ChatGPT and ramps up an increasingly bitter rivalry between the two companies," Bloomberg noted. Just last week, Musk offered to purchase OpenAI's nonprofit arm for $97.4 billion, an offer OpenAI CEO Sam Altman quickly rejected.

Andrej Karpathy, former Director of AI at Tesla, wrote a lengthy post on X about his experience with early access to Grok 3.

Here's a summary of the post:

Thinking Capability: Grok 3 has an advanced thinking model on par with top OpenAI models, successfully handling complex tasks like creating a Settlers of Catan game webpage with dynamic hex grids. However, it failed at solving an emoji mystery involving Unicode variation selectors.

Tic Tac Toe: Grok 3 could solve simple Tic Tac Toe puzzles but struggled to generate "tricky" boards, as did OpenAI's o1-pro.

Knowledge Retrieval: When tested with knowledge-based questions about the GPT-2 paper, Grok 3 performed well, including estimating the computational cost of training GPT-2 without searching the internet, a task at which other models such as o1-pro failed.

Riemann Hypothesis: Grok 3 attempted to solve the Riemann hypothesis, showing initiative by not giving up on challenging problems, unlike some other models.

DeepSearch: This feature combines research capabilities with thinking, providing high-quality responses to various lookup questions. However, it was reluctant to cite X as a source and occasionally hallucinated non-existent URLs.

Humor: Grok 3's humor capability did not show improvement, failing to generate novel or sophisticated jokes, which is a common challenge for LLMs.

Ethical Sensitivity: The model was overly sensitive to complex ethical issues, avoiding questions that might involve ethical dilemmas.

SVG Generation: Asked to generate an SVG of a pelican riding a bicycle, a test of spatial layout abilities, Grok 3 fell short; its result was better than some models' but not as good as Claude's.

Karpathy concluded that Grok 3 with thinking is roughly at the state of the art, slightly ahead of models such as DeepSeek-R1 and Gemini 2.0 Flash Thinking. Considering that xAI started from scratch about a year ago, he called reaching that level on such a timescale unprecedented.

I was given early access to Grok 3 earlier today, making me I think one of the first few who could run a quick vibe check.

Thinking

✅ First, Grok 3 clearly has an around state of the art thinking model ("Think" button) and did great out of the box on my Settler's of Catan… pic.twitter.com/qIrUAN1IfD

— Andrej Karpathy (@karpathy) February 18, 2025

Watch: Full presentation and demo of xAI's latest model

GROK 3: SOLVING PHYSICS, GAMES, AND THE UNIVERSE

Full presentation and demo of xAI's latest model

0:00 xAI's mission: Understand the universe

1:20 Team presentation

2:01 Grok means to profoundly understand

2:29 From Grok 2 to Grok 3

6:30 Grok 3 benchmarks

9:07 Grok 3 improves… https://t.co/7qbB6O16Yb pic.twitter.com/BomGwAOa1I

— Mario Nawfal (@MarioNawfal) February 18, 2025

Musk: "All you need to know to understand which company will win a technology competition is look at the first and second derivatives of the rate of innovation."


— Elon Musk (@elonmusk) February 18, 2025

xAI trains its models on Colossus, a supercomputer in Memphis, Tennessee, powered by a cluster of 100,000 advanced Nvidia GPUs.