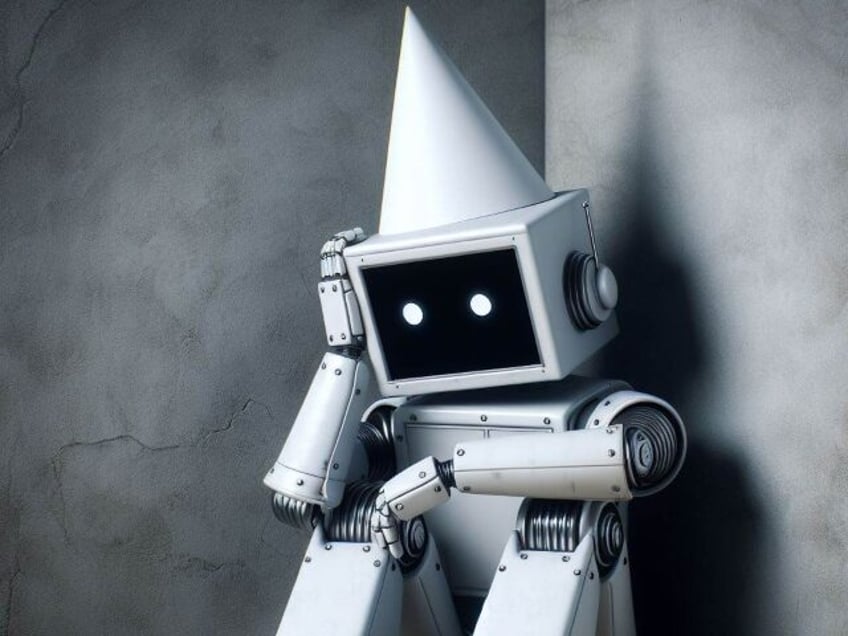

A new study from Columbia Journalism Review’s Tow Center for Digital Journalism has uncovered serious accuracy issues with generative AI models used for news searches. According to the study, AI search engines answered more than 60 percent of queries about the news incorrectly.

Ars Technica reports that the research tested eight AI-driven search tools equipped with live search functionality and discovered that the AI models incorrectly answered more than 60 percent of queries about news sources. This is particularly concerning given that roughly 1 in 4 Americans now use AI models as alternatives to traditional search engines, according to the report by researchers Klaudia Jaźwińska and Aisvarya Chandrasekar.

Error rates varied significantly among the platforms tested. Perplexity provided incorrect information in 37 percent of queries, while ChatGPT Search was wrong 67 percent of the time. Elon Musk’s Grok 3 had the highest error rate at 94 percent. For the study, researchers fed direct excerpts from real news articles to the AI models and asked each one to identify the headline, original publisher, publication date, and URL. In total, 1,600 queries were run across the eight generative search tools.

The study found that rather than declining to respond when they lacked reliable information, the AI models often provided “confabulations” — plausible-sounding but incorrect or speculative answers. This behavior was seen across all models tested. Surprisingly, paid premium versions like Perplexity Pro ($20/month) and Grok 3 premium ($40/month) confidently delivered incorrect responses even more frequently than the free versions, though they did answer more total prompts correctly.

Evidence also emerged suggesting some AI tools ignored publishers’ Robots Exclusion Protocol (robots.txt) settings meant to prevent unauthorized access. For example, Perplexity’s free version correctly identified all 10 excerpts from paywalled National Geographic content, despite the publisher explicitly blocking Perplexity’s web crawlers.
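For readers unfamiliar with the protocol, the sketch below shows how a crawler that honors robots.txt would interpret a publisher's rules, using Python's standard `urllib.robotparser` module. The crawler name `ExampleAIBot`, the `example.com` URL, the rules themselves, and the `is_allowed` helper are all hypothetical and are not taken from the study or from any publisher's actual file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks one named crawler, allows everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if the given robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# The named crawler is disallowed; any other crawler falls under the "*" rule.
blocked = is_allowed("ExampleAIBot", "https://example.com/story")
others = is_allowed("SomeOtherBot", "https://example.com/story")
print(blocked, others)
```

The file only states the publisher's wishes. Nothing in the protocol enforces them, so compliance depends on the crawler performing a check like this one before fetching a page.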

Even when the AI search tools did provide citations, they frequently directed users to syndicated versions on platforms like Yahoo News rather than to the original publisher sites, even in cases where publishers had formal licensing deals with the AI companies. URL fabrication was another major issue: more than half of the citations from Google’s Gemini and Grok 3 led to fabricated or broken URLs that resulted in error pages. Of the 200 Grok 3 citations tested, 154 led to broken links.
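As a quick check on the figures reported above, the arithmetic can be sketched as follows. The even split of queries across the eight tools is an assumption made here for illustration; the variable names are likewise illustrative.

```python
# Figures as reported in the article.
TOTAL_QUERIES = 1600
TOOLS_TESTED = 8
GROK3_BROKEN_LINKS = 154
GROK3_CITATIONS_TESTED = 200

# Assumption: the queries were divided evenly among the tools.
queries_per_tool = TOTAL_QUERIES // TOOLS_TESTED

# Share of tested Grok 3 citations that led to broken links.
broken_share = GROK3_BROKEN_LINKS / GROK3_CITATIONS_TESTED

print(f"{queries_per_tool} queries per tool")
print(f"{broken_share:.0%} of Grok 3 citations were broken")
```

Under that assumption each tool received 200 queries, which matches the 200 Grok 3 citations tested, and 154 of 200 works out to 77 percent, consistent with "more than half."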

These problems present difficult quandaries for publishers. Blocking AI crawlers could lead to a total loss of attribution, but allowing them enables widespread content reuse without driving traffic back to the publishers’ own sites. Mark Howard, COO of Time magazine, said he wants more transparency and control over how Time’s content appears in AI-generated searches. However, he sees room for iterative improvement, stating, “Today is the worst that the product will ever be,” and noting the substantial investments being made to refine the tools. Howard also suggested consumers are at fault if they fully trust free AI tools, saying, “If anybody as a consumer is right now believing that any of these free products are going to be 100 percent accurate, then shame on them.”

Statements from OpenAI and Microsoft acknowledged receipt of the study’s findings but did not directly address the specific issues raised. OpenAI noted its commitment to supporting publishers through summaries, quotes, clear links, and attribution that drive traffic. Microsoft stated that it adheres to the Robots Exclusion Protocol and publisher directives.

This latest report expands on previous Tow Center findings from November 2024 that identified similar accuracy problems in how ChatGPT handled news content. The extensive CJR report provides further details on this important and evolving issue at the intersection of AI and online journalism.

Read more at Ars Technica.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.