A new study claims that Google’s advanced AI chatbot has demonstrated superior diagnostic abilities and empathy compared to board-certified primary-care physicians in simulated medical scenarios.

Nature reports that, according to a recent study, Google’s advanced AI chatbot excels in medical diagnostics. Named the Articulate Medical Intelligence Explorer (AMIE), the system is based on a Google large language model (LLM) and has demonstrated an ability to conduct medical interviews and offer diagnoses with an accuracy that the study claims either matches or surpasses that of human doctors.

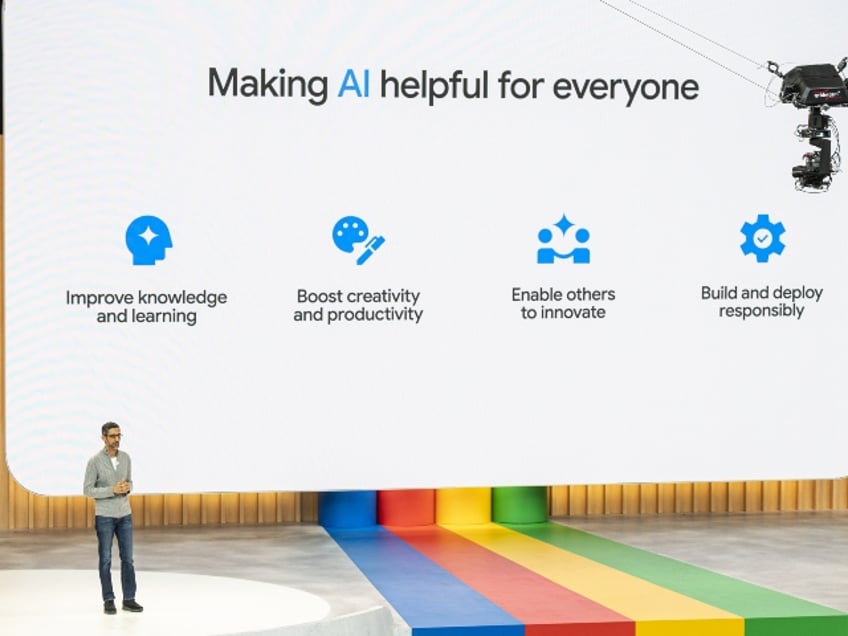

Sundar Pichai, chief executive officer of Alphabet Inc., during the Google I/O Developers Conference in Mountain View, California. Photographer: David Paul Morris/Bloomberg

Alan Karthikesalingam, a clinical research scientist at Google Health in London and a co-author of the study, noted the uniqueness of the Google AI, stating, “To our knowledge, this is the first time that a conversational AI system has ever been designed optimally for diagnostic dialogue and taking the clinical history.” According to the study, the chatbot was more accurate than board-certified primary-care physicians in diagnosing respiratory and cardiovascular conditions, among others, and ranked higher than the physicians in empathy during medical interviews.

However, the AMIE chatbot is still in an experimental phase and has been tested only on actors trained to portray people with medical conditions. Karthikesalingam urged caution in interpreting these results, saying, “We want the results to be interpreted with caution and humility.”

The AI’s training involved a new method to overcome the lack of real-world medical conversations for training data. The Google team initially fine-tuned the LLM with existing datasets such as electronic health records and transcribed medical conversations. To further train the model, the researchers prompted the LLM to role-play as both a patient with a specific condition and an empathetic clinician, aiming to understand the patient’s history and devise potential diagnoses.

In testing the system, researchers used 20 individuals trained as patient impersonators in online text-based consultations with both AMIE and human clinicians. The AI system not only matched but in many cases surpassed the physicians’ diagnostic accuracy. Karthikesalingam notes the inherent advantage of an LLM in this context, explaining that it can “quickly compose long and beautifully structured answers,” allowing it to be consistently considerate without getting tired.

However, Karthikesalingam noted that “this in no way means that a language model is better than doctors in taking clinical history,” as there were a number of factors at play in the test. Some of the doctors may not have been used to diagnosing patients over a text-based chat, which may have affected their performance.

The study’s implications extend beyond diagnostics. As Daniel Ting, a clinician AI scientist at Duke–NUS Medical School in Singapore, points out, probing the system for biases is essential to ensuring that the algorithm is fair and inclusive. Ting also emphasized the importance of privacy and data handling in commercial large language models, stating, “For a lot of these commercial large language model platforms right now, we are still unsure where the data is being stored and how it is being analyzed.”

Read more at Nature.

Lucas Nolan is a reporter for Breitbart News covering issues of free speech and online censorship.