Video game performers say the physical strain and hours put into motion capture and voice work make that work worth protecting against artificial intelligence

Can AI truly replicate the screams of a man on fire? Video game performers want their work protected

By SARAH PARVINI, AP Technology Writer

LOS ANGELES (AP) — For hours, motion capture sensors tacked onto Noshir Dalal’s body tracked his movements as he unleashed aerial strikes, overhead blows and single-handed attacks that would later show up in a video game. He eventually swung the sledgehammer gripped in his hand so many times that he tore a tendon in his forearm. By the end of the day, he couldn’t pull the handle of his car door open.

The physical strain this type of motion work entails, and the hours put into it, are part of why he believes all video game performers should be equally protected from unregulated artificial intelligence.

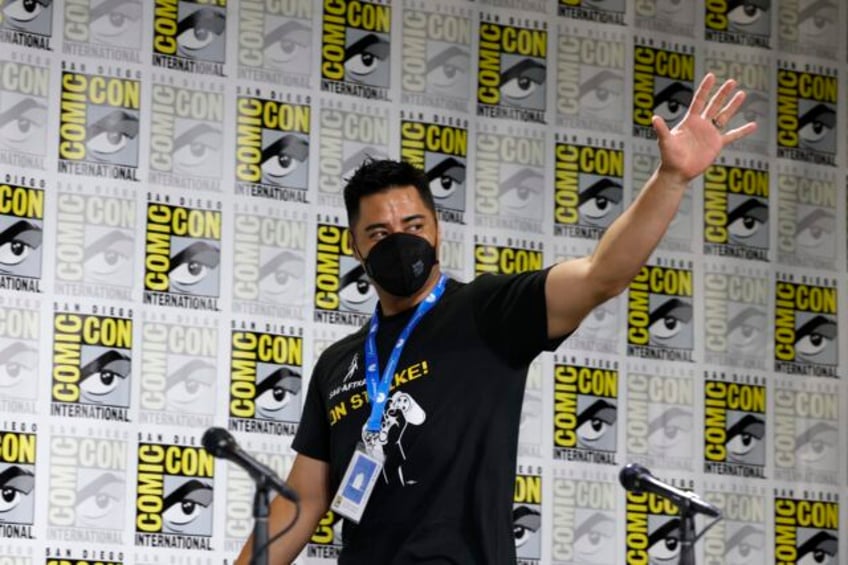

Video game performers say they fear AI could reduce or eliminate job opportunities because the technology could be used to turn a single performance into many other movements without their consent. That concern led the Screen Actors Guild-American Federation of Television and Radio Artists to go on strike in late July.

“If motion-capture actors, video-game actors in general, only make whatever money they make that day … that can be a really slippery slope,” said Dalal, who portrayed Bode Akuna in “Star Wars Jedi: Survivor.” “Instead of being like, ‘Hey, we’re going to bring you back’ … they’re just not going to bring me back at all and not tell me at all that they’re doing this. That’s why transparency and compensation are so important to us in AI protections.”

Hollywood’s video game performers announced a work stoppage, their second in a decade, after more than 18 months of negotiations with game industry giants over a new interactive media agreement broke down over artificial intelligence protections. Members of the union have said they are not anti-AI. The performers are worried, however, that the technology could give studios a means to displace them.

Dalal said he took it personally when he heard that the video game companies negotiating with SAG-AFTRA over a new contract wanted to classify some movement work as “data” rather than performance.

If gamers were to tally up the cut scenes they watch in a game and compare them with the hours they spend controlling characters and interacting with non-player characters, they would see that they interact with “movers’” and stunt performers’ work “way more than you interact with my work,” Dalal said.

“They are the ones selling the world these games live in, when you’re doing combos and pulling off crazy, super cool moves using Force powers, or you’re playing Master Chief, or you’re Spider-Man swinging through the city,” he said.

Some actors argue that AI could strip less-experienced actors of the chance to land smaller background roles, such as non-player characters, where they typically cut their teeth before landing larger jobs. The unchecked use of AI, performers say, could also raise ethical issues if their voices or likenesses are used to create content they morally object to. That type of ethical dilemma has recently surfaced with game “mods,” in which fans alter existing game content or create new content. Last year, voice actors spoke out against mods for the role-playing game “Skyrim” that used AI to generate actors’ performances and cloned their voices for pornographic content.

In video game motion capture, actors wear special Lycra or neoprene suits covered with markers. In addition to more involved interactions, actors perform basic movements like walking, running or holding an object. Animators draw on those motion capture recordings and chain them together so that characters respond to what the person playing the game is doing.

“What AI is allowing game developers to do, or game studios to do, is generate a lot of those animations automatically from past recordings,” said Brian Smith, an assistant professor at Columbia University’s Department of Computer Science. “No longer do studios need to gather new recordings for every single game and every type of animation that they would like to create. They can also draw on their archive of past animation.”

If a studio has motion capture banked from a previous game and wants to create a new character, he said, animators could use those stored recordings as training data.

“With generative AI, you can generate new data based on that pattern of prior data,” he said.

A spokesperson for the video game producers, Audrey Cooling, said the studios offered “meaningful” AI protections, but SAG-AFTRA’s negotiating committee said the studios’ definition of who counts as a “performer” is key to determining who would be protected.

“We have worked hard to deliver proposals with reasonable terms that protect the rights of performers while ensuring we can continue to use the most advanced technology to create a great gaming experience for fans,” Cooling said. “We have proposed terms that provide consent and fair compensation for anyone employed under the (contract) if an AI reproduction or digital replica of their performance is used in games.”

The game companies offered wage increases, she said, with an initial 7% increase in scale rates and an additional 7.64% increase effective in November. Compounded, that’s an increase of 15.17% over the life of the contract. The studios had also agreed to increases in per diems, payment for overnight travel and a boost in overtime rates and bonus payments, she added.

“Our goal is to reach an agreement with the union that will end this strike,” Cooling said.

A 2023 report on the global games market from industry tracker Newzoo predicted that video games would begin to include more AI-generated voices, similar to the voice acting in “High on Life” from Squanch Games. Game developers, the Amsterdam-based firm said, will use AI to produce unique voices, bypassing the need to source voice actors.

“Voice actors may see fewer opportunities in the future, especially as game developers use AI to cut development costs and time,” the report said, noting that “big AAA prestige games like ‘The Last of Us’ and ‘God of War’ use motion capture and voice acting similarly to Hollywood.”

Other games, such as “Cyberpunk 2077,” cast celebrities.

Actor Ben Prendergast said that data points collected for motion capture don’t pick up the “essence” of someone’s performance as an actor. The same is true, he said, of AI-generated voices that can’t deliver the nuanced choices that go into big scenes — or smaller, strenuous efforts like screaming for 20 seconds to portray a character’s death by fire.

“The big issue is that someone, somewhere has this massive data, and I now have no control over it,” said Prendergast, who voices Fuse in the game “Apex Legends.” “Nefarious or otherwise, someone can pick up that data now and go, we need a character that’s nine feet tall, that sounds like Ben Prendergast and can fight this battle scene. And I have no idea that that’s going on until the game comes out.”

Studios would be able to “get away with that,” he said, unless SAG-AFTRA can secure the AI protections it is fighting for.

“It reminds me a lot of sampling in the ’80s and ’90s and 2000s where there were a lot of people getting around sampling classic songs,” he said. “This is an art. If you don’t protect rights over their likeness, or their voice or body and walk now, then you can’t really protect humans from other endeavors.”