The US Central Intelligence Agency's Open-Source Enterprise division will soon roll out a ChatGPT-like large language model (LLM), intended as a tool to help federal and intelligence agencies access intel and information more easily and quickly.

Director of the CIA's Open-Source Enterprise division, Randy Nixon, explained that source information can be sifted and returned to individual intel analysts faster than ever before. "We’ve gone from newspapers and radio, to newspapers and television, to newspapers and cable television, to basic internet, to big data, and it just keeps going," Nixon told Bloomberg.

"We have to find the needles in the needle field," he added. In addition to hundreds of thousands or even millions of classified files, analysts often rely on open-source information for their assessments as well. For example, this could include combing public social media platforms like Facebook or X.

"Then you can take it to the next level and start chatting and asking questions of the machines to give you answers, also sourced," Nixon continued. "Our collection can just continue to grow and grow with no limitations other than how much things cost."

He explained further that the AI platform will be available to Washington's 18 intelligence agencies, including federal law enforcement bodies such as the FBI. The US military is also expected to have access, though security protocols and leak prevention remain a big question, given the vast amounts of classified material that will be at the tool's disposal.

There's also the question of privacy, especially following the Edward Snowden revelations of a decade ago showing that the NSA had in prior years regularly swept up the data of innocent American citizens, violating their Fourth Amendment protections.

According to a prior Bloomberg report which questioned the NSA over the impact on privacy:

"The intelligence community needs to find a way to take benefit of these large models without violating privacy," Gilbert Herrera, director of research at the National Security Agency, said in an interview. "If we want to realize the full power of artificial intelligence for other applications, then we’re probably going to have to rely on a partnership with industry."

"It all has to be done in a manner that respects civil liberties and privacy," Herrera claimed in that prior interview. "It’s a tough problem," he added, further admitting that "The issue of the intelligence community’s use of publicly trained information is an issue we’re going to have to grapple with because otherwise there would be capabilities of AI that we would not be able to use."

The future of artificial intelligence (AI) and machine learning (ML) is bright at #CIA. We’re always looking to build strong partnerships with the visionaries of today … and tomorrow. #CIASXSW #EmergingTech #FutureofIntelligence #ArtificialIntelligence #MachineLearning

— CIA (@CIA) March 13, 2023

But we highly doubt the US government's top intelligence officials will be overly concerned with "limiting" AI's power due to the Bill of Rights and concerns over individual privacy.

Another interesting aspect to the CIA working on its own version of ChatGPT is the question of competition with China's significant advances in AI. The new Bloomberg report highlights the mounting pressure US intelligence faces in the wake of more advanced Chinese capabilities. Beijing is looking to become the global leader in the AI field by 2030, and is already considered by many to be a world leader in the technology:

In an ominous glimpse into the nation's use of the programs, in 2021 China developed a 'prosecutor' that could identify and press charges with a reported 97 percent accuracy.

In contrast, America's law enforcement sphere has also come under fire for struggling to utilize the power of AI in investigations, but Nixon said the new program will aid in condensing the unprecedented levels of information floating through the web.

Still, the West has a much more robust legal concept of individual rights, free expression, and autonomy than communist China does. On this front, concerning a CIA-built AI chatbot, what could possibly go wrong?

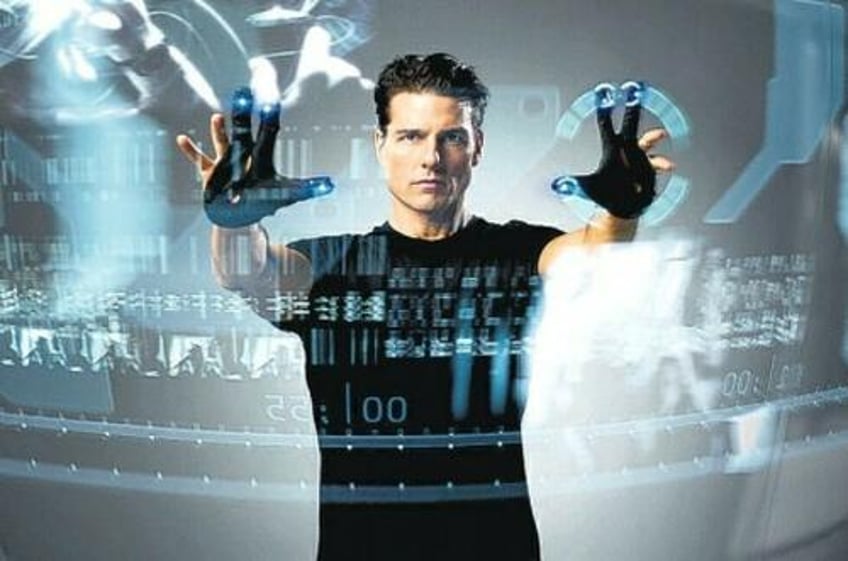

After all, the American public doesn't want to find itself living in a society modeled on "Minority Report" merely for the sake of 'keeping up' technologically with rival superpowers (however, in some ways we are already there).