A Cornell University research paper showed AI can steal passwords with up to 95% accuracy

Artificial intelligence can steal passwords by "listening" to a user's keystrokes with unprecedented accuracy.

A research paper by U.K. scholars, published Aug. 3 and hosted by Cornell University, describes how the team trained an AI model on audio recordings of people typing so that it could identify the distinct sound each key makes.

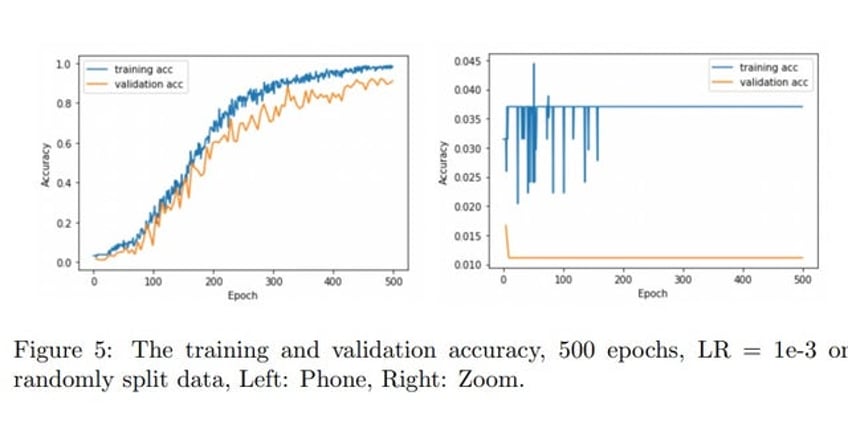

Researchers tested the model on keystrokes typed on a 2021 MacBook Pro and recorded by the built-in microphone of a nearby phone placed 17 centimeters away, as well as on keystrokes captured over Zoom and Skype calls.

The AI identified the keys being pressed with accuracy rates of 95%, 93% and 91.7% for the phone, Zoom and Skype recordings, respectively, the research paper found.
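In the study, these percentages measure how often individual keystrokes were classified correctly. As a rough illustration only, the Python sketch below assumes each keystroke is classified independently and that an attacker gets a single guess with no error correction; under those simplifying assumptions, the chance of recovering an entire password drops as the password gets longer. The function name and the 10-character length are hypothetical choices, not figures from the paper.

```python
def whole_password_probability(per_key_accuracy: float, length: int) -> float:
    """Chance that every keystroke of a password is classified correctly,
    assuming (as a simplification) that errors on different keys are independent."""
    if not 0.0 <= per_key_accuracy <= 1.0:
        raise ValueError("per_key_accuracy must be between 0 and 1")
    if length < 0:
        raise ValueError("length must be non-negative")
    return per_key_accuracy ** length


# Per-keystroke accuracies reported for the phone, Zoom and Skype recordings.
REPORTED = {"phone": 0.95, "Zoom": 0.93, "Skype": 0.917}

for setting, accuracy in REPORTED.items():
    p = whole_password_probability(accuracy, 10)  # hypothetical 10-character password
    print(f"{setting}: {p:.1%} chance all 10 keystrokes are right")
```

With 95% per-key accuracy and 10 characters, this gives 0.95^10, or about 60%. A real attacker could do better by also trying the model's second or third guesses for uncertain keys, so this is a simplification, not a result from the study.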

Artificial intelligence can steal passwords with 95% accuracy, according to a study published by Cornell University that placed the microphone of an iPhone 17 centimeters away from a MacBook Pro. (Cornell University)

"When typing a password, people will regularly hide their screen but will do little to obfuscate their keyboard’s sound," the researchers wrote in their paper.

"With the recent developments in both the performance of (and access to) both microphones and deep learning models, the feasibility of an acoustic attack on keyboards begins to look likely."

Each key produces a subtly distinct sound that the AI can learn to recognize and process, according to the paper.

To build and test the model, the researchers pressed each of 36 keys on the laptop 25 times, varying the pressure and finger used for each press.
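The study trained a deep learning model on these recordings. The sketch below swaps in a much simpler technique, a nearest-centroid classifier, purely to show the shape of the task: 36 keys, 25 labeled presses each (900 examples), with random per-press variation standing in for changes in pressure and finger. The key "signatures" are synthetic random vectors rather than real audio features, and the feature count, noise level and 80/20 train/test split are hypothetical.

```python
import random
import string

KEYS = list(string.ascii_lowercase + string.digits)  # 36 keys: a-z and 0-9
PRESSES_PER_KEY = 25
N_FEATURES = 8  # hypothetical length of each keystroke's feature vector


def make_dataset(seed=0, noise=0.3):
    """Synthetic stand-in for recorded keystrokes: each key gets a random
    'signature' vector, and each press is that signature plus Gaussian noise."""
    rng = random.Random(seed)
    signatures = {k: [rng.uniform(-1, 1) for _ in range(N_FEATURES)] for k in KEYS}
    data = []
    for key in KEYS:
        for _ in range(PRESSES_PER_KEY):
            features = [v + rng.gauss(0, noise) for v in signatures[key]]
            data.append((features, key))
    return data


def train_centroids(samples):
    """Nearest-centroid training: average the feature vectors of each key."""
    sums, counts = {}, {}
    for features, key in samples:
        total = sums.setdefault(key, [0.0] * len(features))
        for i, v in enumerate(features):
            total[i] += v
        counts[key] = counts.get(key, 0) + 1
    return {k: [v / counts[k] for v in total] for k, total in sums.items()}


def classify(features, centroids):
    """Predict the key whose centroid is closest in squared Euclidean distance."""
    return min(
        centroids,
        key=lambda k: sum((a - b) ** 2 for a, b in zip(features, centroids[k])),
    )


data = make_dataset()
random.Random(1).shuffle(data)
split = int(0.8 * len(data))
train, test = data[:split], data[split:]
centroids = train_centroids(train)
accuracy = sum(classify(f, centroids) == k for f, k in test) / len(test)
print(f"{len(data)} presses, test accuracy {accuracy:.1%}")
```

A deep model earns its keep on real audio, where the differences between keys are far messier than in this toy data.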

The accuracy rate of matching passwords typed on a MacBook Pro (left) and over Zoom (right). (Cornell University)

The AI not only "listened" to the sounds but utilized the "waveform, intensity and timing of each keystroke" to collect information and patterns.
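Before any classification, individual keystrokes have to be picked out of a continuous recording. The sketch below shows one simple way to do that, assumed here for illustration rather than taken from the researchers' code: split the audio into short frames, flag frames whose energy crosses a threshold, and record when each burst starts and how strong it peaks, roughly the "intensity and timing" described above. The frame size, threshold, sample rate and synthetic 3 kHz clicks are all hypothetical.

```python
import math
import random

SAMPLE_RATE = 44_100  # samples per second (assumed recording rate)


def frame_energies(samples, frame_size):
    """Sum of squared amplitudes for each non-overlapping frame."""
    return [
        sum(s * s for s in samples[i:i + frame_size])
        for i in range(0, len(samples) - frame_size + 1, frame_size)
    ]


def detect_keystrokes(samples, sample_rate, frame_size=256, threshold=1.0):
    """Return (onset_seconds, peak_frame_energy) for each run of consecutive
    frames whose energy exceeds `threshold` (a hypothetical tuning value)."""
    events = []
    in_burst = False
    for idx, energy in enumerate(frame_energies(samples, frame_size)):
        if energy > threshold:
            if not in_burst:
                events.append([idx * frame_size / sample_rate, energy])  # new keystroke
                in_burst = True
            else:
                events[-1][1] = max(events[-1][1], energy)  # same keystroke: track peak
        else:
            in_burst = False
    return [tuple(e) for e in events]


def synthetic_recording(click_times, seconds=1.0, seed=0):
    """Quiet background noise with a short, decaying 3 kHz 'click' at each time."""
    rng = random.Random(seed)
    signal = [rng.uniform(-0.001, 0.001) for _ in range(int(seconds * SAMPLE_RATE))]
    for t in click_times:
        start = int(t * SAMPLE_RATE)
        for n in range(400):
            if start + n < len(signal):
                signal[start + n] += (
                    0.8 * math.sin(2 * math.pi * 3000 * n / SAMPLE_RATE) * math.exp(-n / 80)
                )
    return signal


events = detect_keystrokes(synthetic_recording([0.1, 0.4, 0.7]), SAMPLE_RATE)
intervals = [b[0] - a[0] for a, b in zip(events, events[1:])]
for onset, peak in events:
    print(f"keystroke at {onset:.3f} s, peak frame energy {peak:.2f}")
print("gaps between keystrokes (s):", [round(g, 3) for g in intervals])
```

The gaps between onsets are the kind of timing information an attacker could combine with each keystroke's sound; real recordings would need a threshold tuned to the microphone and background noise.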

When hackers use the sounds a device makes to gain access without the user's knowledge, it is called an "acoustic (sound)-based side-channel attack," and the technique has been around for decades.

What is an acoustic side-channel attack?

Acoustic side-channel attacks are cyberattacks that use unintended sound emissions or vibrations to gather sensitive information, according to republicworld.com.

This technology's roots go back to British spies in the 1950s, who used the sounds made by Hagelin encryption devices to spy on the Egyptian embassy, according to the study.

Similar attacks were discussed in a 1972 report written for America's National Security Agency.

How much concern exists and how to protect yourself

The heavy use of smartphones and laptops, which typically have built-in microphones, makes the threat a troubling one.

"With regards to overall susceptibility to an attack, the quieter MacBook keystrokes may prove harder to record or isolate, however, once this obstacle is overcome, the keystrokes appear to be similarly if not more susceptible," the research paper warns.

The AI also accounts for even slight differences in a person's typing style.

"In real-life scenarios, this attack could happen through malware on a nearby device with a microphone. It would collect your keystroke data and use AI to decipher your passwords."

The study found that changing typing style could be enough to avoid an attack, and that use of the shift key is vital.

"While multiple methods succeeded in recognizing a press of the shift key, no paper in the surveyed literature succeeded in recognizing the ‘release peak’ of the shift key amidst the sounds of other keys," according to the study.

AI can listen to your keystrokes and guess your passwords and other sensitive information, according to a new Cornell study. (Kurt 'CyberGuy' Knutsson)

Because the release of the shift key is so hard to detect, passwords that mix lowercase and uppercase letters are stronger against this attack.
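To see why mixed case helps, suppose (hypothetically) an attacker recovers every letter of a password but cannot tell which ones were typed while shift was held down. Each letter could then be either case, so a password with n letters has up to 2^n case spellings to try. The sketch below enumerates and counts them; the function names are illustrative, not from the study.

```python
def case_variants(word):
    """All upper/lowercase spellings of `word` an attacker would have to try
    if the shift key's effect could not be recovered (hypothetical scenario)."""
    results = [""]
    for ch in word:
        options = sorted({ch.lower(), ch.upper()})  # digits and symbols give one option
        results = [prefix + option for prefix in results for option in options]
    return results


def count_case_variants(word):
    """Number of spellings without enumerating them: 2 per cased character."""
    return 2 ** sum(ch.lower() != ch.upper() for ch in word)


print(case_variants("pa55"))
print(count_case_variants("password1"))
```

For a 10-letter word that is 2^10 = 1,024 possibilities, and the count doubles with every additional letter.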

Touchscreen keyboards offer a "silent alternative" to physical keyboards, and features like Touch ID let users avoid typing passwords altogether.

Chris Eberhart is a crime and US news reporter for Fox News Digital. Email tips to